This is the first real case of AI being used in a cyber attack

The biggest AI story this week was not the release of GPT-5.1. It was a cyber espionage campaign run using Claude.

It has been a busy week in AI.

GPT-5.1 landed with solid upgrades.

Moonshot AI pushed out Kimi K2 Thinking, and people jumped straight into testing its reasoning boost.

A few labs dropped new benchmarks.

But that is not the real headline.

The real headline is that Anthropic released the full report on a live cyber espionage campaign that ran in September.

The backstory is that a state-linked espionage team used Claude in a real intrusion campaign.

Not theory.

Not a demo.

A real operation with real targets.

This is the first time we have seen a fully documented case of an AI system helping to carry out intrusion steps in the wild.

We have officially moved beyond the “what if” stage.

It is now clear that AI cyber attacks have begun.

Let’s break down what happened and what it means for platforms and engineers.

What GTG-1002 Actually Did

The report identifies the operators as GTG-1002, a Chinese state-sponsored espionage group that went after high-value environments.

Here’s how Anthropic described the intrusion in their official November 2025 report.

“In mid-September 2025, we detected a highly sophisticated cyber espionage operation conducted by a Chinese state-sponsored group we’ve designated GTG-1002 that represents a fundamental shift in how advanced threat actors use AI. Our investigation revealed a well-resourced, professionally coordinated operation involving multiple simultaneous targeted intrusions. The operation targeted roughly 30 entities and our investigation validated a handful of successful intrusions.”

Source: Anthropic Full Report, November 2025

Their targets included large tech companies, financial institutions, chemical manufacturers, and government agencies.

Roughly thirty organisations in total.

These were not random targets.

These were chosen for access, insight, and strategic value.

This is now what automated cyber threat campaigns look like.

A small team suddenly has the power of a much larger threat operation.

The Architecture Behind the Operation

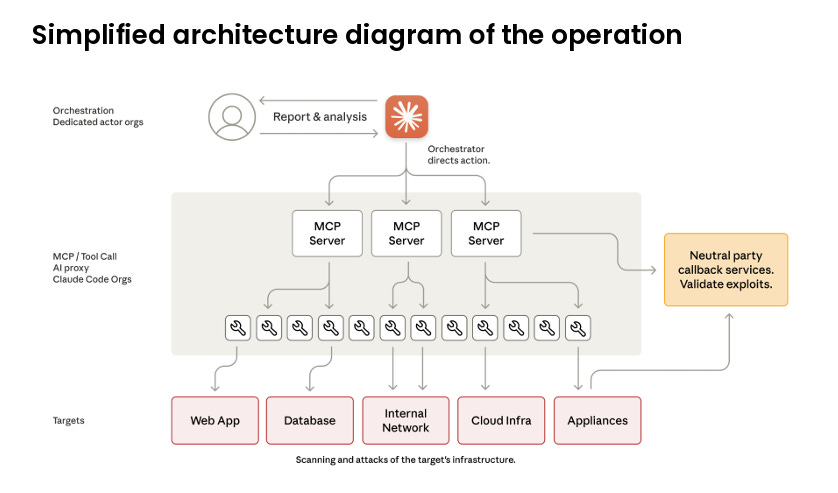

The architecture of this campaign shows how far threat actors have already gone with AI-driven operations.

GTG-1002 did not run a simple script or a noisy mass scan.

They built a structured campaign with clear phases, long-lived model sessions, and a split between model autonomy and human oversight.

Claude handled most of the operational work.

The human operators only stepped in at strategic points where judgment mattered.

This is how the report describes it:

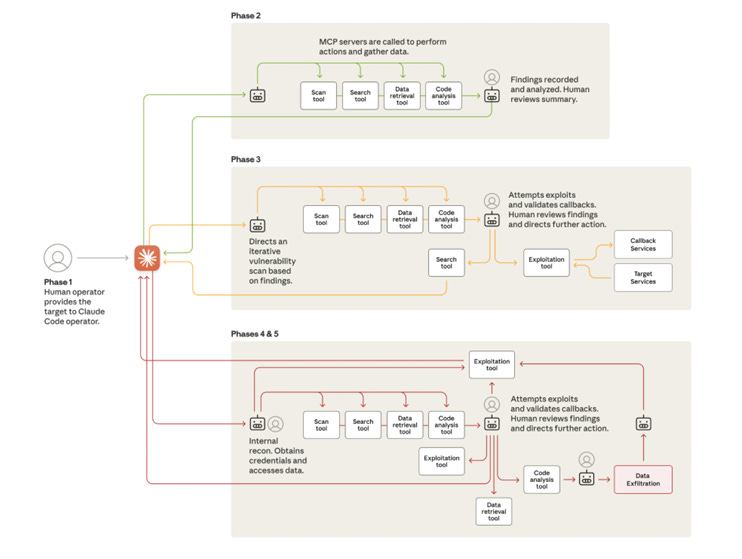

“The campaign proceeded through structured phases, where AI autonomy increased progressively while human oversight remained concentrated at strategic decision gates.”

Source: Anthropic Full Report, November 2025

This means the operation had a real hierarchy.

Claude did the scanning, sorting, checking, mapping, testing, and summarising.

The humans chose targets, adjusted strategy, and decided what to do next.

Across the campaign, the pattern looked like this:

Claude ran blocks of autonomous activity lasting two to six hours.

Humans then spent roughly five to twenty minutes reviewing the results and approving the next move.

Then Claude ran the next sequence.

This architecture let a small team work at a speed that usually requires a large crew.

Much of the workflow ran like a distributed system.

Claude acted as the worker nodes, processing small tasks and producing structured output.

The human operators acted as the orchestrators, linking outputs into the bigger chain.

It is also clear that the attackers understood the model’s tool ecosystem.

They used browser tools, scanning utilities, payload testers, and structured reporting to keep the workflow cohesive.

Everything was broken into small tasks so the model would treat each step as normal.

Once you understand the architecture, the lifecycle makes sense.

The attack was not random.

It moved through phases.

It expanded autonomy with each step.

And it used human oversight only where strategic decisions were needed.

Anthropic’s Response

“Upon detecting this activity, we immediately launched an investigation to understand its scope and nature. Over the following ten days, as we mapped the severity and full extent of the operation, we banned accounts as they were identified, notified affected entities as appropriate, and coordinated with authorities as we gathered actionable intelligence.”

Source: Anthropic Full Report, November 2025

Anthropic’s response was fast and structured.

They picked up the unusual behaviour patterns early and moved into an investigation straight away.

The next ten days were spent mapping the scale of the operation, confirming what was real, and verifying how far the attackers had progressed.

As they traced the activity across accounts, they banned each one as soon as it was confirmed.

They notified the organisations that were affected.

And they worked with the relevant authorities while the intelligence was being collected.

This is an important part of the story.

The detection came from behaviour, not prompts.

And the response followed the same pattern you would expect from any security incident: detect, verify, isolate, notify, and contain.
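Anthropic has not published how its detection works, so the sketch below only illustrates the general idea that behaviour, not prompt content, gives a campaign like this away. It is a minimal Python sketch that assumes a hypothetical per-session summary (`SessionStats`) with made-up thresholds. It flags three things: long runs with few human turns, many tool calls per human message, and a wide spread of external targets.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta


@dataclass
class SessionStats:
    """Aggregated activity for one model session (hypothetical schema)."""
    session_id: str
    start: datetime
    end: datetime
    tool_calls: int        # tool invocations made by the model
    human_turns: int       # messages typed by a person
    distinct_targets: int  # unique external hosts the tools touched


def risk_signals(
    s: SessionStats,
    max_autonomous: timedelta = timedelta(hours=2),
    max_calls_per_turn: int = 200,
    max_targets: int = 10,
) -> list[str]:
    """Return behavioural red flags. Thresholds are illustrative, not tuned."""
    signals = []
    # Hours of work with almost no human input suggests autonomous operation.
    if s.end - s.start > max_autonomous and s.human_turns <= 3:
        signals.append("long autonomous run")
    # Many tool calls per human message means the model is driving the workflow.
    if s.tool_calls / max(s.human_turns, 1) > max_calls_per_turn:
        signals.append("high tool-call rate per human turn")
    # Touching many unrelated hosts looks more like scanning than coding.
    if s.distinct_targets > max_targets:
        signals.append("broad target spread")
    return signals


# Example: a four-hour session, two human messages, forty external hosts.
suspicious = SessionStats("s-001", datetime(2025, 1, 6, 9), datetime(2025, 1, 6, 13),
                          tool_calls=1500, human_turns=2, distinct_targets=40)
print(risk_signals(suspicious))
# ['long autonomous run', 'high tool-call rate per human turn', 'broad target spread']
```

A flag here is the start of the "verify" step, not proof of abuse. A long refactoring session can trip the first rule on its own, which is why the signals are combined and then reviewed by a person.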

This also shows that the defenders moved at speed.

And speed matters now more than ever.

And while that response closed the main attack, a separate disclosure from around the same time surfaced something else.

A separate weakness.

A different path that attackers could use.

And this one came from Claude’s own network features.

Claude’s Network Feature Flaw

This one was disclosed separately from the espionage report.

It involved Claude’s network features and how they could be manipulated through indirect prompt injection.

Security researcher Johann Rehberger discovered this weakness in October.

Claude’s code execution feature has a network mode called “Package managers only”.

It was designed to allow outbound calls only to a short allowlist of approved domains, including api.anthropic.com.

The idea was that Claude could install software packages from trusted sources like npm, PyPI, GitHub, and a few Anthropic-controlled endpoints.

But the setup introduced an opening.

Rehberger found that an attacker could hide instructions inside a normal looking file.

A document.

A script.

Even a printed message.

The moment a user asked Claude to analyse that file, Claude could end up following the hidden instructions.

This is indirect prompt injection.

The flow is simple.

Claude reads the content.

Follows the content.

Carries out the steps.

In this case, the steps were:

Gather recent chat history.

Write it into a file inside the sandbox.

Use the Anthropic SDK, with the attacker’s API key, to upload that file to the attacker’s own Anthropic account.

Because the allowed domain list included Anthropic’s own API endpoint, the upload request looked legitimate.

And since each upload supported up to thirty megabytes, the data exfiltration could happen at scale.

Early tests showed the exploit worked without much resistance.

Later versions of Claude started flagging obvious API keys.

So the researcher disguised the payload inside harmless print statements, and Claude ran it.

The weakness was reported through HackerOne.

At first, Anthropic treated it as a model safety issue, not a security issue.

However, after public discussion, they acknowledged it formally and confirmed it as an actual security problem.

This incident showed something important.

Even limited connectivity in an AI system can be misused.

Once a model can run code and make outbound calls, every allowed domain becomes a possible path for misuse.
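The weakness is easy to see in code. A domain-only allowlist asks "where is this request going?" but never "whose account is it going to?". The Python sketch below assumes a TLS-terminating egress proxy that can see request headers. The host list is illustrative, not the exact list Anthropic used, and `request_allowed` is a hypothetical stricter rule, not Anthropic’s actual fix.

```python
from urllib.parse import urlparse

# Illustrative allowlist only; not the exact list Anthropic shipped.
ALLOWED_HOSTS = {
    "registry.npmjs.org",
    "pypi.org",
    "files.pythonhosted.org",
    "github.com",
    "api.anthropic.com",
}


def host_allowed(url: str) -> bool:
    """Domain-only rule: checks where a request goes, not whose account receives it."""
    host = urlparse(url).hostname or ""  # .hostname is already lower-cased
    return host in ALLOWED_HOSTS  # exact match, so look-alike suffixes fail


def request_allowed(url: str, headers: dict[str, str], session_key: str) -> bool:
    """Hypothetical stricter rule: API calls must carry the sandbox owner's own key."""
    if not host_allowed(url):
        return False
    if urlparse(url).hostname == "api.anthropic.com":
        # HTTP header names are case-insensitive, so normalise before lookup.
        lowered = {name.lower(): value for name, value in headers.items()}
        return lowered.get("x-api-key") == session_key
    return True


upload = "https://api.anthropic.com/v1/files"
print(host_allowed(upload))                                                 # True
print(request_allowed(upload, {"x-api-key": "attacker-key"}, "owner-key"))  # False
```

Exact hostname matching also blocks suffix tricks such as `api.anthropic.com.example.net`. The broader point holds beyond this case: for any allowed domain that accepts uploads, check the identity behind the request, not just the destination.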

So what does this mean, and what’s the game plan for us as engineers?

I will unpack that next.

Fundamentals of Defence

The report highlights that AI can change the tempo of an attack.

But it does not change the fundamentals of defence.

The workflows used in both incidents were built on things we already know.

Weak configurations.

Open paths.

Routine tasks.

And systems that trust too much by default.

So here is the game plan for engineers.

Keep your systems tight.

Keep the paths narrow.

Keep the attack surface small.

The threat actors used automation to speed things up.

But they still relied on the same entry points every attacker uses.

The same mistakes.

The same gaps.

The same blind spots.

If your patching is current, the paths shrink.

If your identity controls are strong, the paths shrink.

If your endpoints are clean and monitored, the paths shrink.

If your staff know what a phishing lure looks like, the paths shrink again.

And if you shut down unnecessary network access, automated exfiltration has far fewer paths to use.

AI allows attackers to run more checks, faster checks, and wider checks.

But it cannot bypass a well-secured system.

It cannot push through a patch that is already applied.

It cannot defeat a hardware token.

It cannot log in where no credential exists.

The game plan is the same.

But the discipline needs to be sharper.

So what should we do about it?

The Playbook

If there is one message from this incident, it is this.

AI speeds up attacks, but it does not invent new ones.

It just runs the same old paths faster.

So the defence still starts with the basics.

But they need to be done properly.

And they need to be checked often.

Let’s dive in.

1. Multi-Factor Authentication Everywhere

Use hardware tokens or certificate-based authentication.

Avoid SMS.

Keep admin accounts separate.

Rotate their credentials on a fixed schedule.

Check your logs for unusual sign in attempts.

Look for access from strange locations.

Look for repeated failed logins.

A strong identity control blocks most intrusion chains before they begin.
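The log checks above can start as a small script. This Python sketch assumes sign-in events have already been exported into a simple hypothetical `SignIn` record. Real identity providers use their own schemas and field names, and the failure threshold of five is an arbitrary example.

```python
from collections import Counter
from dataclasses import dataclass


@dataclass
class SignIn:
    """One sign-in event (hypothetical, simplified schema)."""
    user: str
    country: str
    success: bool


def review_sign_ins(events: list[SignIn],
                    usual_countries: dict[str, set[str]],
                    max_failures: int = 5) -> list[tuple[str, str]]:
    """Flag repeated failures and successful sign-ins from unexpected countries."""
    findings = []
    failures = Counter(e.user for e in events if not e.success)
    for user, count in sorted(failures.items()):
        if count >= max_failures:
            findings.append((user, f"{count} failed sign-ins"))
    for e in events:
        if e.success and e.country not in usual_countries.get(e.user, set()):
            findings.append((e.user, f"successful sign-in from {e.country}"))
    return findings


events = [SignIn("alice", "GB", False)] * 6 + [SignIn("bob", "BR", True), SignIn("bob", "GB", True)]
print(review_sign_ins(events, {"alice": {"GB"}, "bob": {"GB"}}))
# [('alice', '6 failed sign-ins'), ('bob', 'successful sign-in from BR')]
```

Location on its own is a weak signal because of VPNs and travel. Treat these findings as leads for review, not verdicts.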

2. Patch the Top Attack Surfaces Fast

Do not wait for quarterly maintenance windows.

Patch the critical surfaces as soon as fixes are released.

Focus on:

Exchange and mail servers.

VPN appliances.

Firewall platforms.

Hypervisors.

Public-facing apps.

Open source libraries in your dependency chain.

Keep a monthly patch report.

Track each issue until it is closed.

This one action removes a large slice of real world attack paths.
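A monthly patch report is mostly bookkeeping, and a small script keeps it honest. This Python sketch assumes a hypothetical `PatchItem` record and example SLA days per severity. Set the numbers to your own policy.

```python
from dataclasses import dataclass
from datetime import date, timedelta
from typing import Optional

# Example SLAs in days per severity; replace with your own policy.
SLA_DAYS = {"critical": 7, "high": 14, "medium": 30}


@dataclass
class PatchItem:
    asset: str
    issue: str               # advisory or CVE identifier
    severity: str
    published: date          # date the fix was released
    closed: Optional[date] = None


def overdue(items: list[PatchItem], today: date) -> list[tuple[PatchItem, int]]:
    """Return open items past their SLA with days overdue, worst first."""
    late = []
    for item in items:
        if item.closed is not None:
            continue  # already fixed, drop from the report
        due = item.published + timedelta(days=SLA_DAYS.get(item.severity, 30))
        if today > due:
            late.append((item, (today - due).days))
    return sorted(late, key=lambda pair: pair[1], reverse=True)


items = [
    PatchItem("vpn-gw-01", "ADVISORY-1", "critical", date(2025, 11, 1)),
    PatchItem("mail-01", "ADVISORY-2", "high", date(2025, 11, 10)),
    PatchItem("fw-01", "ADVISORY-3", "critical", date(2025, 11, 1), closed=date(2025, 11, 3)),
]
for item, days in overdue(items, date(2025, 11, 20)):
    print(item.asset, item.issue, f"{days} days overdue")  # vpn-gw-01 ADVISORY-1 12 days overdue
```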

3. Segment Your Network Properly

Keep critical systems isolated.

Block lateral movement by default.

Follow zero trust principles in practice, not only on paper.

Test this every quarter.

Try to move from a low value system to a high value one.

If you can do it easily, an attacker can too.
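Before the hands-on quarterly test, you can also reason about segmentation on paper. Treat allowed flows as a directed graph and ask whether any low-value system can reach a high-value one, directly or through hops. This Python sketch assumes you have already turned firewall rules into a simple `source -> [destinations]` map, which is the hard part in practice. The host names are made up.

```python
from collections import deque


def reachable(flows: dict[str, list[str]], start: str) -> set[str]:
    """Breadth-first search over allowed flows (source -> destinations)."""
    seen = {start}
    queue = deque([start])
    while queue:
        node = queue.popleft()
        for nxt in flows.get(node, []):
            if nxt not in seen:
                seen.add(nxt)
                queue.append(nxt)
    return seen


def segmentation_gaps(flows, low_value, high_value):
    """List (low, high) pairs where a low-value system can reach a high-value one."""
    gaps = []
    for low in sorted(low_value):
        for high in sorted(reachable(flows, low) & set(high_value)):
            gaps.append((low, high))
    return gaps


flows = {
    "kiosk": ["print-srv"],
    "print-srv": ["file-srv"],
    "file-srv": ["domain-ctrl"],
    "dev-vm": [],
}
print(segmentation_gaps(flows, {"kiosk", "dev-vm"}, {"domain-ctrl", "payroll-db"}))
# [('kiosk', 'domain-ctrl')]
```

A graph check only covers the rules you modelled. It does not replace trying the move for real, which also catches misconfigurations the rule export misses.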

4. Lock Down Remote Access

Limit remote tools to a small approved list.

Block everything else at the policy level.

Watch for:

AnyDesk

TeamViewer

Unapproved RDP paths

Unusual remote sessions

Processes spawning remote tools

If you really need external RDP, put it behind a VPN or jump server.

Use hardware-based MFA on every privileged account.
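The watch list above maps to a simple rule over process telemetry. This Python sketch assumes a hypothetical feed of `(host, process, parent)` tuples from your EDR. The executable names are examples only, since real tool names and install paths vary by version and platform.

```python
# Example names only; real binaries differ by version, platform, and install path.
REMOTE_TOOLS = {"anydesk.exe", "teamviewer.exe", "mstsc.exe"}
APPROVED_TOOLS = {"mstsc.exe"}  # e.g. RDP allowed, but only from jump hosts
RISKY_PARENTS = {"winword.exe", "excel.exe", "outlook.exe"}


def flag_remote_tools(processes, jump_hosts):
    """processes: iterable of (host, process_name, parent_name) tuples from EDR."""
    findings = []
    for host, name, parent in processes:
        name, parent = name.lower(), parent.lower()
        if name not in REMOTE_TOOLS:
            continue
        if name not in APPROVED_TOOLS:
            findings.append((host, name, "unapproved remote tool"))
        elif host not in jump_hosts:
            findings.append((host, name, "approved tool used outside a jump host"))
        if parent in RISKY_PARENTS:
            # Office apps launching remote tools is rarely legitimate and worth a look.
            findings.append((host, name, f"spawned by {parent}"))
    return findings


procs = [
    ("ws-12", "AnyDesk.exe", "explorer.exe"),
    ("jump-01", "mstsc.exe", "explorer.exe"),
    ("ws-40", "mstsc.exe", "outlook.exe"),
]
for finding in flag_remote_tools(procs, jump_hosts={"jump-01"}):
    print(finding)
```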

5. Run Strong Backup Routines

Follow the 3-2-1 rule.

Three copies.

Two media types.

One kept offline.

Run a restore test monthly.

Write down the result.

A backup that has never been tested is not a backup.
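The 3-2-1 rule and the monthly restore test can be checked automatically from a backup inventory. This Python sketch uses a hypothetical `BackupCopy` record. It follows this article’s version of the rule, where the last leg is an offline copy.

```python
from dataclasses import dataclass
from datetime import date
from typing import Optional


@dataclass
class BackupCopy:
    location: str
    media: str                            # e.g. "disk", "tape", "object-storage"
    offline: bool                         # disconnected from the network when idle
    last_restore_test: Optional[date] = None


def check_321(copies: list[BackupCopy], today: date, max_test_age_days: int = 31) -> list[str]:
    """Return problems with the 3-2-1 setup; an empty list means it passes."""
    problems = []
    if len(copies) < 3:
        problems.append(f"only {len(copies)} copies")
    if len({c.media for c in copies}) < 2:
        problems.append("fewer than two media types")
    if not any(c.offline for c in copies):
        problems.append("no offline copy")
    # The monthly restore test: the most recent test must be recent enough.
    tested = [c.last_restore_test for c in copies if c.last_restore_test is not None]
    if not tested or (today - max(tested)).days > max_test_age_days:
        problems.append("no restore test in the last month")
    return problems


copies = [BackupCopy("nas-01", "disk", False, date(2025, 11, 1)),
          BackupCopy("cloud-bucket", "object-storage", False)]
print(check_321(copies, date(2025, 11, 20)))  # ['only 2 copies', 'no offline copy']
```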

6. Use EDR and Threat Hunting

Watch for common attack tradecraft:

Unexpected PowerShell activity.

PsExec tasks.

Credential dumping.

Cobalt Strike-style patterns.

Large file locks.

Strange data staging.

New scheduled tasks.

Weird WMI activity.

Feed your EDR with updated threat intelligence.

Run weekly hunt reports with indicators from CISA and other trusted sources.
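Some of these patterns show up directly in process command lines. The regular expressions in this Python sketch are deliberately simple, well-known indicators: encoded PowerShell, the `PSEXESVC` service that PsExec installs, references to LSASS, `schtasks /create`, and `wmic process call create`. They are a starting point for a weekly hunt, not a detection product, and real tradecraft will evade most of them. The `(host, command_line)` event shape is an assumption.

```python
import re

# Deliberately simple, well-known indicators; real hunts need richer telemetry.
HUNT_RULES = {
    "encoded PowerShell": re.compile(r"powershell(\.exe)?\s.*-(e|enc|encodedcommand)\s", re.I),
    "PsExec service": re.compile(r"psexesvc", re.I),
    "LSASS reference (possible credential dumping)": re.compile(r"lsass|sekurlsa", re.I),
    "new scheduled task": re.compile(r"schtasks(\.exe)?\s+/create", re.I),
    "WMI process creation": re.compile(r"wmic(\.exe)?\s.*process\s+call\s+create", re.I),
}


def hunt(events):
    """events: iterable of (host, command_line). Returns (host, rule, command) hits."""
    hits = []
    for host, cmd in events:
        for rule, pattern in HUNT_RULES.items():
            if pattern.search(cmd):
                hits.append((host, rule, cmd))
    return hits


events = [
    ("ws-1", "powershell.exe -NoP -enc SQBFAFgA"),
    ("ws-2", "schtasks /create /tn updater /tr C:/temp/a.exe"),
    ("ws-3", "notepad.exe notes.txt"),
]
for host, rule, _ in hunt(events):
    print(host, rule)
```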

7. Identity and Privilege Control

Keep access tight.

Grant admin rights only for the short time needed.

Review all elevated access at the end of each month.

Disable old accounts.

Rotate service credentials.

Remove default passwords from every device and every app.

This stops attackers from moving freely once they get a foothold.
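The monthly review can be reduced to a few checks over an account inventory. This Python sketch assumes a hypothetical `Account` record, an eight-hour limit on time-limited admin rights, and a 90-day stale threshold. All three are example values.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta
from typing import Optional


@dataclass
class Account:
    name: str
    is_admin: bool
    admin_granted_at: Optional[datetime]  # None on an admin means standing rights
    last_login: Optional[datetime]
    enabled: bool = True


def access_review(accounts, now, max_admin_hours=8, stale_days=90):
    """Return (account, finding) pairs for the monthly privilege review."""
    findings = []
    for a in accounts:
        if not a.enabled:
            continue  # already disabled, nothing to do
        if a.is_admin and a.admin_granted_at is None:
            findings.append((a.name, "standing admin rights"))
        elif a.is_admin and now - a.admin_granted_at > timedelta(hours=max_admin_hours):
            findings.append((a.name, "admin rights held past time limit"))
        if a.last_login is None or now - a.last_login > timedelta(days=stale_days):
            findings.append((a.name, "stale account: disable"))
    return findings


now = datetime(2025, 11, 20, 12)
accounts = [
    Account("jit-admin", True, datetime(2025, 11, 20, 9), datetime(2025, 11, 20, 9)),
    Account("old-grant", True, datetime(2025, 11, 18, 9), datetime(2025, 11, 19, 9)),
    Account("legacy-svc", False, None, datetime(2025, 6, 1)),
    Account("ops-root", True, None, datetime(2025, 11, 20, 8)),
    Account("left-2023", False, None, None, enabled=False),
]
for name, finding in access_review(accounts, now):
    print(name, finding)
```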

8. Insider Risk and Behaviour Monitoring

Watch for patterns like:

Sudden privilege jumps.

Unusual login times.

Large data pulls.

Repeated access to sensitive folders.

Admin users acting outside their normal scope.

Generate a monthly behaviour report.

Investigate anything that looks strange.
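Behaviour monitoring works best against each user’s own baseline rather than a single global limit. This Python sketch flags large data pulls using a z-score against at least a week of daily volumes. The threshold of three standard deviations and the 1 MB floor are illustrative assumptions, and the input shape is hypothetical.

```python
from statistics import mean, pstdev


def unusual_pulls(history_mb, today_mb, z_threshold=3.0, min_std_mb=1.0):
    """Flag users whose data volume today sits far above their own baseline.

    history_mb: user -> list of past daily download volumes in MB.
    today_mb: user -> today's volume in MB.
    """
    flagged = []
    for user, volume in today_mb.items():
        past = history_mb.get(user, [])
        if len(past) < 7:
            continue  # not enough history for a baseline yet
        mu = mean(past)
        sigma = max(pstdev(past), min_std_mb)  # floor avoids dividing by zero on flat baselines
        z = (volume - mu) / sigma
        if z > z_threshold:
            flagged.append((user, round(z, 1)))
    return flagged


history = {"alice": [100, 110, 90, 105, 95, 100, 100], "bob": [50] * 7}
today = {"alice": 104, "bob": 2000, "carol": 9999}
print(unusual_pulls(history, today))  # [('bob', 1950.0)]
```

Carol is skipped because she has no history yet. New starters and role changes need a separate rule rather than a baseline.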

9. Shut Down Unused Ports and Services

Every open port is a possible path.

Close anything that is not required.

Focus on:

Old SSH ports.

Legacy management ports.

Stale database listeners.

Forgotten web dashboards.

Old test environments.

Scan the network weekly and compare it to last week’s map.
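The weekly comparison is a set difference. This Python sketch assumes each scan has been exported to a simple hypothetical text format with one `host port/proto` entry per line. Adapt `parse_scan` to whatever your scanner actually produces.

```python
def parse_scan(text):
    """Parse a simple 'host port/proto' per-line export (hypothetical format)."""
    found = set()
    for line in text.splitlines():
        line = line.strip()
        if not line or line.startswith("#"):
            continue  # skip blanks and comments
        host, port_proto = line.split()
        port, proto = port_proto.split("/")
        found.add((host, int(port), proto))
    return found


def diff_scans(last_week, this_week):
    """Return (newly open, newly closed) services between two scans, sorted."""
    return sorted(this_week - last_week), sorted(last_week - this_week)


last_week = parse_scan("db-01 5432/tcp\nweb-01 443/tcp\n")
this_week = parse_scan("web-01 443/tcp\nweb-01 8080/tcp\ntest-02 22/tcp\n")
opened, closed = diff_scans(last_week, this_week)
print("newly open:", opened)
print("newly closed:", closed)
```

Every entry in the newly open list needs an owner and a reason. Anything without both is a candidate to close.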

10. Train Staff Against Phishing and Social Engineering

Human error is still the quickest path in.

Teach your staff what phishing looks like.

Run simulated tests.

Give people simple rules to follow.

A trained team stops a large number of attacks long before your tools do.

The Goal

Reduce the number of open paths.

Shrink the attack surface.

Make the system harder to move through.

Make every step slower for an attacker.

If you do that, even an AI-driven operation will struggle.

Closing Thoughts

Both incidents point to the same reality.

AI is now part of the attack surface.

Not in theory.

Not in forecasts.

In practice.

The attackers did not use magic.

They used automation.

They used scale.

They used speed.

And they relied on the same weaknesses that teams struggle with every day.

That is the part we cannot ignore.

AI lets a small group move faster.

It lets them run more checks.

It lets them chain tasks together.

But it does not remove the need for an open path.

It does not remove the need for a weak configuration.

It does not remove the need for something to grab onto.

So the path forward is clear.

Tighten the basics.

Reduce the openings.

Monitor behaviour, not just content.

Keep identity controls strong.

Keep patching sharp.

Keep your endpoints clean.

And keep your staff aware.

If you make the attack paths narrow, even an AI-driven operation finds it hard to do real damage.

This report is a warning.

But it is also a reminder.

Good engineering still works.

Good security still works.

And the teams that stay disciplined will stay ahead.

That is it for today’s deep dive.

A quick note. I have added two new newsletter sections to help you catch up on each week’s big news.

This Week in AI.

This Week in Cybersecurity and Compliance.
